The first results of the transcription app are in!!!!

And boy are they fascinating. Absolutely fascinating.

For those who missed it, I am developing a personal-use application that uses OpenAI’s new Whisper model to transcribe my dictated audio, so I can see how accurate it is and how it compares to Dragon. If it is better, I will adopt it. If it’s not, I will keep it as a backup in case Microsoft retires Dragon (which is possible).

Unfortunately, I am not developing this for the community because of some significant problems I will share below. Whisper’s not ready for the big time or the average consumer yet.

TECHNICAL ALERT—SEE SUMMARY BELOW IF YOU DON’T WANT THE NITTY-GRITTY

But anyway, the developer I am working with ran some initial tests last night. He hooked up to the Whisper API and ran benchmarks.

Whisper actually comes in 5 different model sizes: tiny, base, small, medium, and large. They were all trained on the same data; each step up is simply a bigger model (more parameters). The bigger the model, the better the transcription (in theory), but the longer it takes to run.
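For reference, here is a small Python sketch of that size tradeoff. The parameter counts and memory figures are the approximate ones published in the openai/whisper GitHub README (which lists them as GPU VRAM needs, so CPU RAM use will differ; check the current README before relying on them). `largest_model_that_fits` is a hypothetical helper for illustration, not part of Whisper.

```python
from typing import Optional

# Approximate figures from the openai/whisper README: parameter count in
# millions and rough memory needed in GB. Ballpark numbers only.
WHISPER_MODELS = {
    "tiny": {"params_m": 39, "mem_gb": 1},
    "base": {"params_m": 74, "mem_gb": 1},
    "small": {"params_m": 244, "mem_gb": 2},
    "medium": {"params_m": 769, "mem_gb": 5},
    "large": {"params_m": 1550, "mem_gb": 10},
}


def largest_model_that_fits(free_mem_gb: float) -> Optional[str]:
    """Hypothetical helper: the biggest model whose rough memory need fits.

    It ignores speed, which matters just as much in practice.
    """
    best = None
    # Dicts preserve insertion order, so this walks from smallest to largest.
    for name, spec in WHISPER_MODELS.items():
        if spec["mem_gb"] <= free_mem_gb:
            best = name
    return best


print(largest_model_that_fits(4))    # small
print(largest_model_that_fits(0.5))  # None
```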

We tested a fiction sample and a nonfiction sample. This was not a scientific study by any means.

Here are the results and some observations:

#1: First, I want to get one thing out of the way: Whisper produces very clean transcription. Very, very clean. But how clean depends on which model you’re using. I don’t believe that the folks at OpenAI intended for this model to be used for dictation audio, so using it in many respects is like fitting a square peg into a round hole, but it can be done.

Anyway, we tested the samples and got output from the tiny, base, and small models. Each model produced a different transcription with a slightly different flavor (the medium and large models take way too much time and RAM to run).
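For the technically curious, a benchmark like this can be sketched in a few lines of Python. This assumes the open-source `openai-whisper` package run locally (`whisper.load_model` and `model.transcribe` are its documented entry points); I’m not claiming this is exactly how my developer structured his code, and `benchmark` and `whisper_transcribe` are hypothetical names. Note that the timing includes loading each model, which a fairer benchmark would measure separately.

```python
import time
from typing import Callable, Dict, Sequence


def whisper_transcribe(model_name: str, audio_path: str) -> str:
    """Transcribe one file with the open-source openai-whisper package."""
    import whisper  # pip install openai-whisper; ffmpeg must also be installed

    model = whisper.load_model(model_name)  # downloads weights on first use
    result = model.transcribe(audio_path)
    return result["text"]


def benchmark(
    audio_path: str,
    model_names: Sequence[str] = ("tiny", "base", "small"),
    transcribe: Callable[[str, str], str] = whisper_transcribe,
) -> Dict[str, dict]:
    """Run each model on the same file; record its text and wall-clock time."""
    results = {}
    for name in model_names:
        start = time.perf_counter()
        text = transcribe(name, audio_path)
        results[name] = {"text": text, "seconds": time.perf_counter() - start}
    return results
```

Calling `benchmark("dictation.mp3")` (a placeholder file name) returns each model’s text and elapsed seconds. The `transcribe` parameter is there so a different backend, or a fake for testing, can be swapped in without touching the timing loop.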

I went through each model’s transcription line by line, marking the number of errors. I divided the errors into two categories: ones that I could catch with my Microsoft Word macros, and ones that I could not. If I could catch the error with a macro, then I didn’t count it toward the total error count (because I can fix it). I only care about the errors that require actual human intervention & editing to fix.
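The count above was done by hand. If you have a clean reference transcript, the usual automatic measure is word error rate (WER): the minimum number of word substitutions, deletions, and insertions needed to turn the transcript into the reference, divided by the number of reference words. A minimal Python sketch (the function names are hypothetical):

```python
def word_errors(reference: str, hypothesis: str) -> int:
    """Word-level edit distance: substitutions + deletions + insertions."""
    ref, hyp = reference.split(), hypothesis.split()
    # prev[j] holds the distance between the first i-1 reference words
    # and the first j transcript words (one row of the classic DP table).
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # reference word dropped (deletion)
                curr[j - 1] + 1,     # extra word in transcript (insertion)
                prev[j - 1] + cost,  # match or substitution
            )
        prev = curr
    return prev[-1]


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Errors divided by the number of words in the reference."""
    n = len(reference.split())
    if n == 0:
        raise ValueError("reference transcript is empty")
    return word_errors(reference, hypothesis) / n


ref = "I use Microsoft Office every day"
hyp = "I use Microsoft office everyday"
print(word_errors(ref, hyp))      # 3
print(word_error_rate(ref, hyp))  # 0.5
```

It compares words exactly, so a capitalization slip like “office” for “Office” counts as an error. Lowercasing both strings first is one way to ignore the kinds of errors a macro would fix anyway.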

As we progressed through each model, it became very clear that the number of errors went down considerably as the models got bigger. The “tiny” model was the worst performer of the bunch, the base model was a little better, and the small model was the best by a big margin. (Again, not scientific…)

The “small” model was the clear winner (fiction and nonfiction) for author transcription needs. Anything less than that and it’s not accurate enough. The bigger models take too much time and resources to run. So Small it is.

The fiction sample was 250 words, and there was only 1 error. That’s over 99% accurate.

The nonfiction sample was 700 words, and there were 6 errors. That’s also over 99% accurate.
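The arithmetic behind those percentages, treating accuracy simply as one minus errors per word (a simplification, since a single error can span several words):

```python
def accuracy_pct(words: int, errors: int) -> float:
    """Percentage of words transcribed correctly (errors per word, inverted)."""
    return 100 * (1 - errors / words)


print(f"Fiction:    {accuracy_pct(250, 1):.1f}%")  # 99.6%
print(f"Nonfiction: {accuracy_pct(700, 6):.1f}%")  # 99.1%
```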

The smaller models had significantly more errors and performed worse than Dragon.

Whisper was inconsistent with proper nouns, and those accounted for most of the errors. (For example, it transcribed “Microsoft office” and “nuance software” with the wrong capitalization. Don’t even get me started on fictional character names.) But Dragon doesn’t exactly handle proper nouns well either. I already deal with proper nouns using my macro, and it works quite well. When you subtract the proper-noun errors, the transcription is nearly perfect: the nonfiction sample drops to only 1 error (from 6) and the fiction sample drops to zero (from 1). (!!!)
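A macro-style proper-noun fix boils down to a case-insensitive, whole-word find-and-replace against a list of correct spellings. Here is a rough Python equivalent; the word list and `fix_proper_nouns` are hypothetical, and this is not my actual Word macro (which is VBA).

```python
import re

# Hypothetical list of correct spellings; in practice this would hold your
# product names, places, and character names.
PROPER_NOUNS = ["Microsoft Office", "Nuance"]


def fix_proper_nouns(text: str, nouns=PROPER_NOUNS) -> str:
    """Case-insensitive, whole-word replace with each noun's correct casing."""
    for noun in nouns:
        pattern = re.compile(r"\b" + re.escape(noun) + r"\b", re.IGNORECASE)
        # A function replacement avoids any backslash handling in `noun`.
        text = pattern.sub(lambda m, fixed=noun: fixed, text)
    return text


print(fix_proper_nouns("I use Microsoft office and nuance software."))
# I use Microsoft Office and Nuance software.
```

The `\b` word boundaries keep it from touching words that merely contain a name (“nuanced” is left alone), which matters once the list includes character names that are also ordinary words.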

Again, this is just one test. Every dictation session is going to produce some kind of error. No tool is truly perfect.

#2: Whisper takes significantly longer to run than Dragon. For a 5-minute file, Dragon takes approximately 1 minute to transcribe. Whisper’s small model takes 3.5 minutes on the same file, or 3.5x longer. The “tiny” and “base” models are closer to Dragon speed-wise (again, I’m not being scientific here), but they produce more transcription errors. There is a very clear tradeoff here.
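Put another way, the useful number is the real-time factor: processing time divided by audio length. A quick sketch using the figures above, assuming processing time scales linearly with audio length (a simplification; the helper names are hypothetical):

```python
def real_time_factor(processing_min: float, audio_min: float) -> float:
    """Processing time divided by audio length; lower is faster."""
    return processing_min / audio_min


def estimated_minutes(audio_min: float, rtf: float) -> float:
    """Rough time to transcribe `audio_min` minutes of audio at a given factor."""
    return audio_min * rtf


dragon_rtf = real_time_factor(1.0, 5.0)         # 0.2
whisper_small_rtf = real_time_factor(3.5, 5.0)  # 0.7

print(f"Whisper small vs Dragon: {whisper_small_rtf / dragon_rtf:.1f}x")  # 3.5x
print(f"One hour of audio: Dragon ~{estimated_minutes(60, dragon_rtf):.0f} min, "
      f"Whisper small ~{estimated_minutes(60, whisper_small_rtf):.0f} min")
```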

I want to caveat that YMMV depending on your computer specs so take these numbers with a grain of salt, but I think it’s pretty clear that Whisper will take longer for most people than Dragon.

#3: Whisper takes considerably more RAM to run than Dragon. Whisper took approximately 2.5 GB of RAM alone to process the transcription (Intel i7, 16GB of RAM—others’ mileage may vary depending on their setup). That’s enough to make a lot of people’s fans kick on and slow their computers down. Dragon requires 2GB of RAM to install, but I don’t think it uses that much when transcribing.
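If you want to check memory use on your own machine, Python’s standard `resource` module reports a process’s peak resident memory. This sketch is Unix-only (macOS/Linux; on Windows, Task Manager is the easy option), and `peak_memory_mb` is a hypothetical helper you would call after `model.transcribe(...)` finishes in the same script.

```python
import resource
import sys


def peak_memory_mb() -> float:
    """Peak resident memory of the current process so far, in megabytes."""
    peak = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss
    # ru_maxrss is reported in bytes on macOS but in kilobytes on Linux.
    if sys.platform == "darwin":
        return peak / (1024 * 1024)
    return peak / 1024


print(f"Peak memory so far: {peak_memory_mb():.0f} MB")
```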

It’s worth pointing out that if your computer is too slow to run an app like this, you can rent a GPU from Google or Amazon to handle the processing for you. But either 1) you’ll have to wait a while, because their free GPUs are shared by many people and wait times vary, or 2) you’ll have to pay for a premium GPU service. It’s not expensive, but it is an expense.

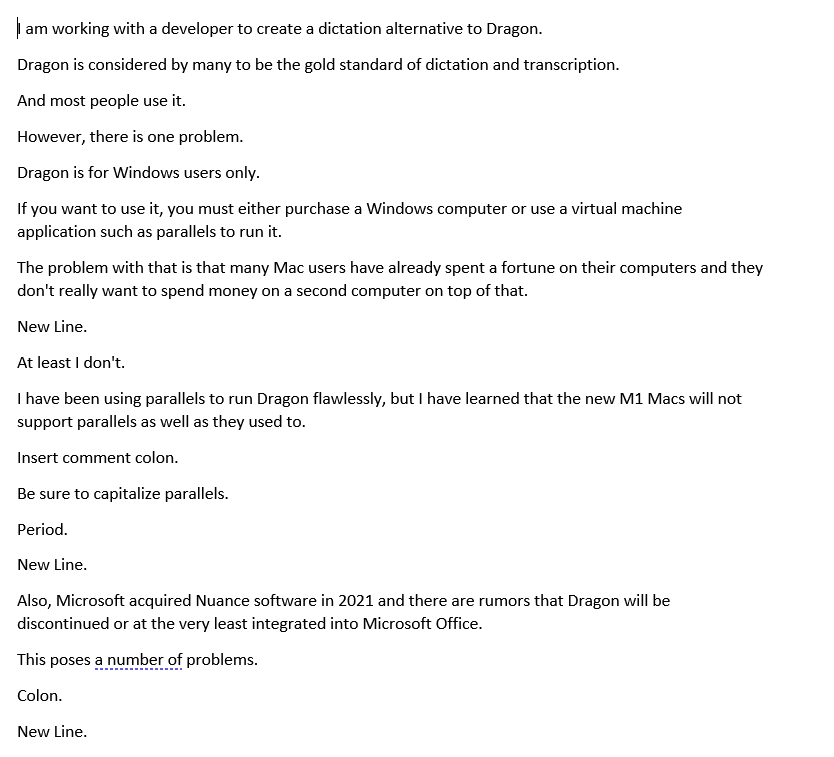

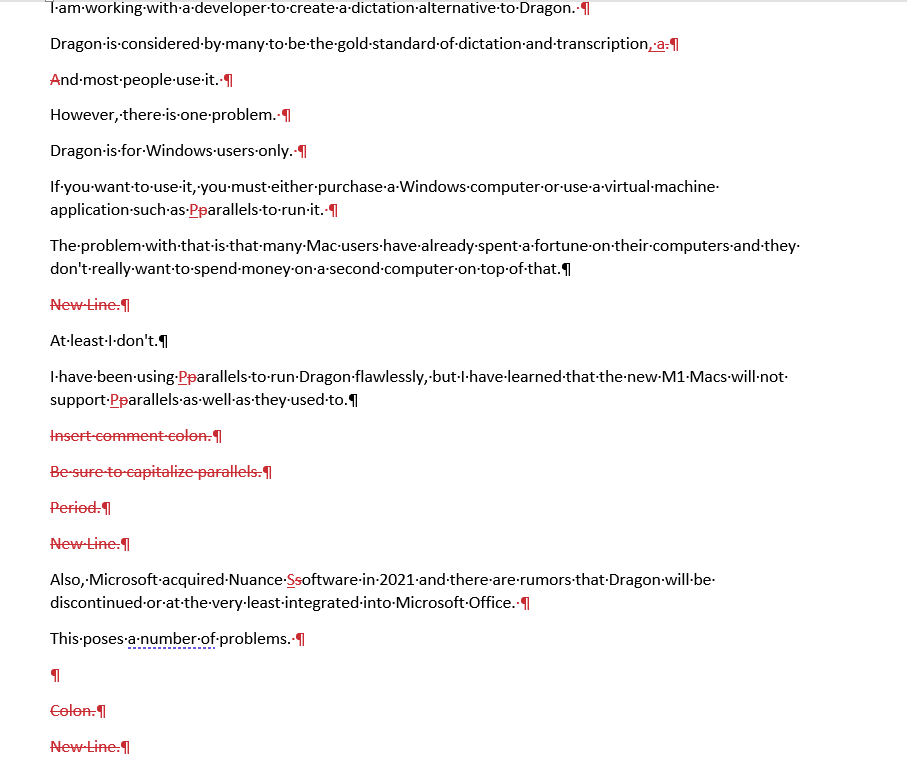

#4: Whisper’s results, while accurate, are very, very messy. See screenshot #1 below. There are a few things going on. First, Whisper automatically places commas and periods (very well), but it doesn’t understand parentheses, open/close quotes, “new line”, or other punctuation commands that Dragon users are used to. It also inserts returns in the middle of sentences, which is really frustrating. Therefore, it gets confused and makes a mess of the text. See screenshot #2 that represents the tracked changes I had to make to get it to be workable.

Screenshot #1:

The good news is that I can use my dictation Word macro to tighten up most if not all of this. The bad news is that unless you know how to do this yourself (or how to hire someone to do it), Whisper will be largely unusable for dictating authors until OpenAI updates the model to recognize punctuation commands. But if and when they update the model to fix this… game-changer. In a big way.
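To give a sense of what that cleanup involves, here is a rough Python sketch of two of the steps: throwing away the line breaks Whisper inserts, then turning spoken commands like “open quote” and “new line” into real punctuation. The command table and `clean_dictation` are hypothetical, this is not my actual Word macro, and it ignores plenty of real-world mess (it only absorbs a single stray comma or period Whisper may put around a command).

```python
import re

# Hypothetical Dragon-style command table:
# (spoken words, replacement, absorb comma/space before?, absorb punctuation/space after?)
COMMANDS = [
    ("new paragraph", "\n\n", True, True),
    ("new line", "\n", True, True),
    ("open paren", "(", False, True),
    ("close paren", ")", True, False),
    ("open quote", "\u201c", False, True),
    ("close quote", "\u201d", True, False),
]


def clean_dictation(text: str) -> str:
    # 1. Treat every line break and run of spaces Whisper produced as noise.
    text = " ".join(text.split())
    # 2. Replace each spoken command with its symbol, swallowing the space
    #    (and a stray comma or period) on the side the symbol should hug.
    for spoken, symbol, eat_before, eat_after in COMMANDS:
        pattern = (
            (r",? ?" if eat_before else "")
            + r"\b" + re.escape(spoken) + r"\b"
            + (r"[.,]? ?" if eat_after else "")
        )
        text = re.sub(pattern, lambda m, s=symbol: s, text, flags=re.IGNORECASE)
    return text


print(clean_dictation("He said open quote hello close quote and\nleft. New paragraph. The end."))
```

The design choice here is that layout comes only from the spoken “new line” and “new paragraph” commands, which matches how Dragon users already dictate, rather than trying to guess which of Whisper’s own returns were intentional.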

#5: I am optimistic that I can combine this application with updates to my macro to get me somewhere around 99% accurate. The punctuation problems are a PITA but I think they’re fixable (I’ve dealt with worse, trust me). That said, I think my VBA guy who does my macros is going to hate me now. 🙂

PLAIN ENGLISH SUMMARY

So what does all of this mean?

- I don’t believe that the folks at OpenAI intended for the Whisper model to be used for dictation audio, so using it in many respects is like fitting a square peg into a round hole, but it can be done.

- It is shockingly easy to use the Whisper API for transcription if you know Python. It’s almost scary how easy it is.

- Whisper’s accuracy is quite good, around 99% with the small model in my test, but it does not handle punctuation beyond periods and commas well. In fact, its treatment of punctuation will be a nonstarter for many people until OpenAI updates the model. These problems can be addressed with Microsoft Word macros, but that requires know-how most folks probably don’t have.

- Whisper is just one update away from being amazing. The punctuation problems could be solved very easily by the development team, and quickly too. If/when that happens, the application I’ve built will most definitely be usable, and for the first time, Mac users will have a viable alternative to Dragon. Additionally, Whisper may be the only alternative in the future if Microsoft ever discontinues Dragon and/or changes it so that it is no longer useful to authors.

- Despite the issues I pointed out, Whisper IS a viable alternative to Dragon for transcription even though it is outside of the reach of most people. In my first limited test, it appears to be better. Given that Dragon is the gold standard in dictation/transcription, that is saying a lot. And I say that as someone who is a Dragon expert and has transcribed thousands of hours of dictated audio in Dragon over the years.

- It should also be pointed out that Word’s Editor, Grammarly, ProWritingAid, and PerfectIt in combination can catch many of the errors that Whisper creates, giving you even cleaner results.

- I’m not going to turn into a Dragon basher. Dragon’s user experience is buttery smooth and it will be a while before Whisper can replicate that…if OpenAI wants to. They may not. In the meantime, if you have Dragon, continue using it and enjoy it until you can’t anymore.

- In my test, Whisper’s small model took 3.5x longer to transcribe than Dragon, and it is very resource-heavy. You have to have a lot of RAM and a decent processor. If you have an entry-level laptop computer, forget it. You need at least a middle-tier processor and somewhere between 16GB and 32GB of RAM.

- If you don’t have a computer that is strong enough to run Whisper, you’ll need to use a cloud GPU, which will either give you inconsistent processing times or will cost you. If you have no idea what I just said, then that underscores my point that Whisper is not yet ready for the big-time or average consumers.

Anyway, that’s all for now. I have a lot to digest, but I’m moving forward with the app.